Co-advisor: Dr. Guy Austern

The rapid transition towards a localized economy has made 3D printing technology increasingly accessible for home use, empowering individuals to create custom items. However, designing for 3D printing still requires specialized 3D modeling and design skills, which restricts it to those with such expertise. This research seeks to close this gap by using machine learning (ML) to automatically generate 3D-printable objects from textual descriptions, thereby reducing the need for advanced design proficiency.

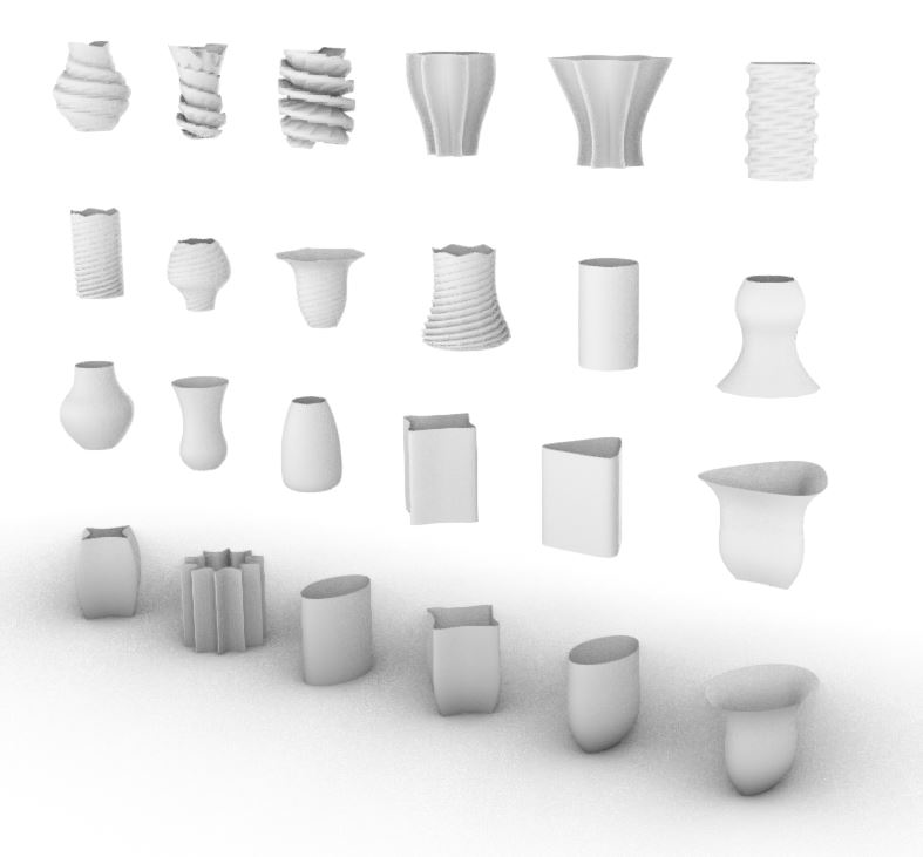

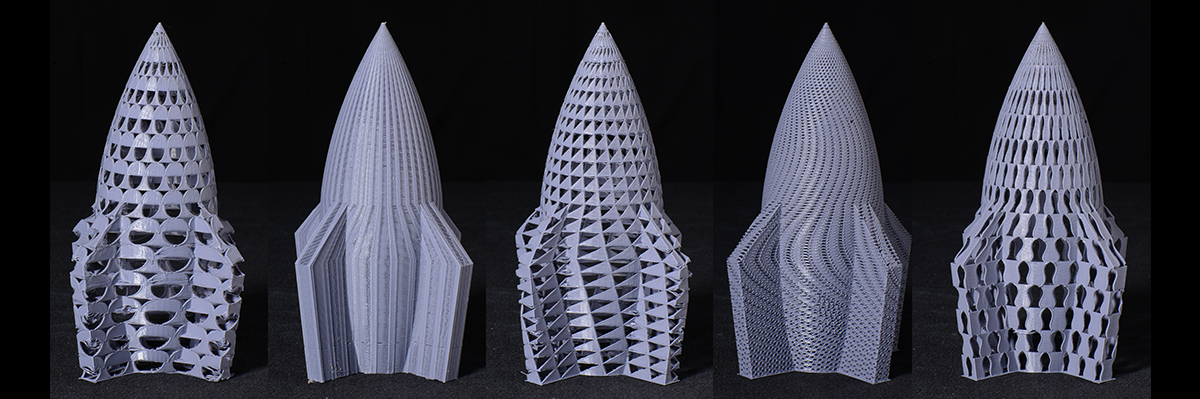

Existing methods for generating 3D shapes from textual prompts still struggle with specificity, ambiguity handling, fine detail, computational cost, the incorporation of constraints, and the predictability of their outputs. To overcome these challenges, this research proposes using ML to generate code that creates parametric 3D shapes, giving users fine-grained control over their designs, since the shape's dimensions and features are exposed as explicit parameters that can be inspected and adjusted. We will fine-tune an existing text-to-code ML model to turn user prompts into code, written in a programming language such as Python, that describes the desired 3D object. This code will then be converted into a printable 3D model. The long-term objective of this work is to enable individuals to design and print functional objects effortlessly, without prior modeling knowledge, ultimately reshaping the landscape of accessible, personalized manufacturing.
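To make the proposed pipeline (prompt, then Python code describing a parametric shape, then a printable model) more concrete, here is a minimal sketch of what the middle and final stages could look like. The functions `cylinder_mesh` and `to_ascii_stl` are hypothetical stand-ins for code a text-to-code model might emit; they are not part of any existing tool. Exporting to ASCII STL is likewise an assumption, chosen because STL is a common input format for 3D-printing slicers; the proposal does not specify an output format. Only the Python standard library is used.

```python
import math


def cylinder_mesh(radius, height, segments=32):
    """Hypothetical generated code: a closed triangle mesh for a cylinder
    centred on the z-axis with its base at z=0. Each triangle is a tuple of
    three (x, y, z) vertices, wound counter-clockwise seen from outside."""
    # Explicit parameter checks: one way parametric code can enforce constraints
    if radius <= 0 or height <= 0 or segments < 3:
        raise ValueError("radius and height must be positive, segments >= 3")
    # Points around the rim, counter-clockwise when viewed from +z
    ring = [(radius * math.cos(2 * math.pi * i / segments),
             radius * math.sin(2 * math.pi * i / segments))
            for i in range(segments)]
    bottom_c, top_c = (0.0, 0.0, 0.0), (0.0, 0.0, height)
    tris = []
    for i in range(segments):
        x0, y0 = ring[i]
        x1, y1 = ring[(i + 1) % segments]
        b0, b1 = (x0, y0, 0.0), (x1, y1, 0.0)
        t0, t1 = (x0, y0, height), (x1, y1, height)
        # Side wall: two triangles per segment, normals pointing outward
        tris.append((b0, b1, t1))
        tris.append((b0, t1, t0))
        # Bottom cap (normal -z) and top cap (normal +z)
        tris.append((bottom_c, b1, b0))
        tris.append((top_c, t0, t1))
    return tris


def _normal(a, b, c):
    """Unit normal of triangle (a, b, c) via the cross product (b-a) x (c-a)."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz) or 1.0  # avoid /0
    return (nx / length, ny / length, nz / length)


def to_ascii_stl(tris, name="part"):
    """Serialize a triangle list as an ASCII STL string."""
    lines = [f"solid {name}"]
    for a, b, c in tris:
        n = _normal(a, b, c)
        lines.append(f"  facet normal {n[0]:.6e} {n[1]:.6e} {n[2]:.6e}")
        lines.append("    outer loop")
        for v in (a, b, c):
            lines.append(f"      vertex {v[0]:.6e} {v[1]:.6e} {v[2]:.6e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # e.g. a prompt like "a peg 40 mm wide and 60 mm tall" might map to:
    stl_text = to_ascii_stl(cylinder_mesh(radius=20.0, height=60.0), name="peg")
    with open("peg.stl", "w") as f:
        f.write(stl_text)
```

Because the object is described by named parameters rather than a fixed mesh, a user could change `radius` or `height` and regenerate the file directly. STL itself carries no units; most slicers interpret the coordinates as millimetres.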